AI Translate

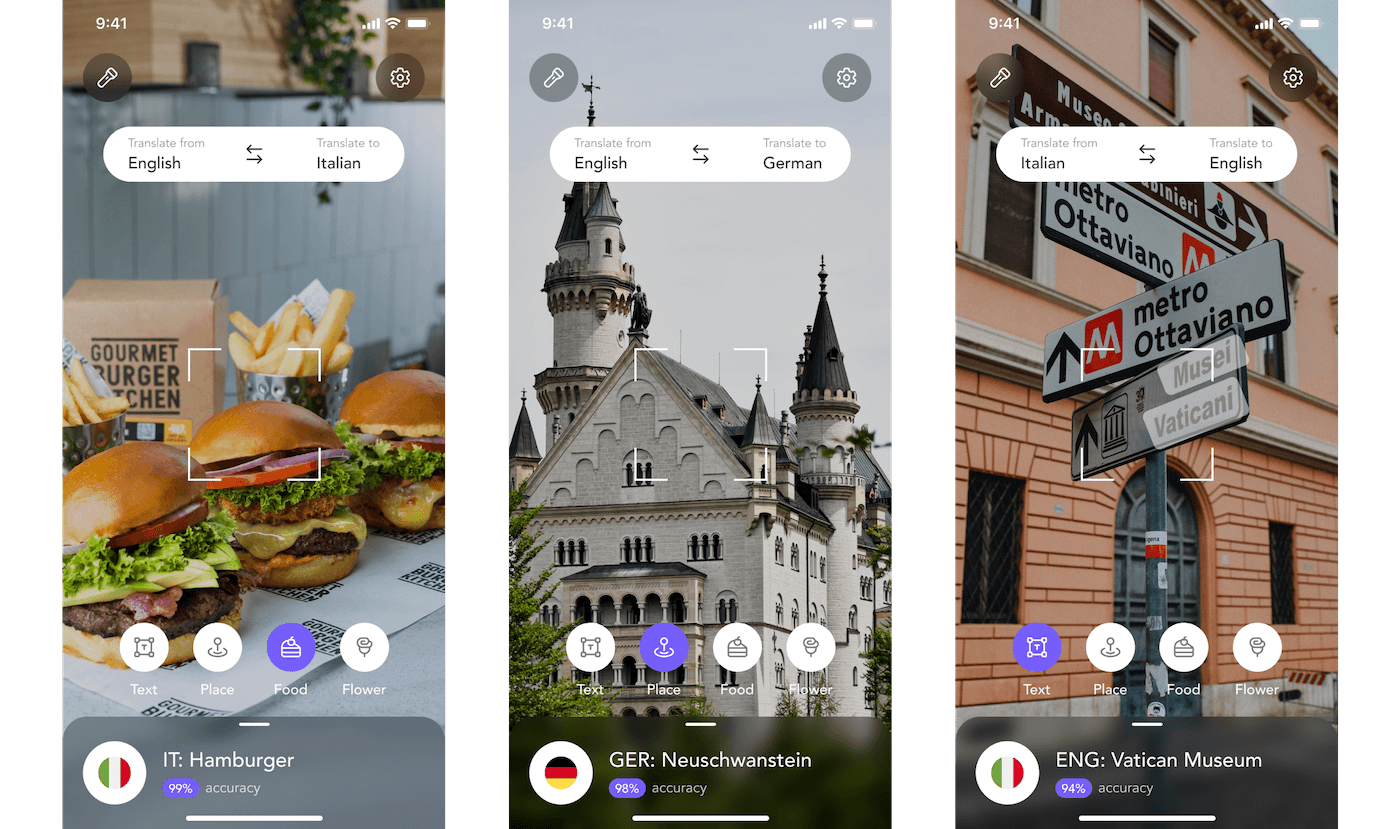

Frame Sixty introduces AI Translate, an app that harnesses Apple’s machine learning frameworks to recognize objects and text and translate them into more than 100 languages, with voice and text options. This versatile tool is perfect for travelers, language learners, and people with visual impairments, offering instant translations and identifications that help them understand any environment. With just the phone’s camera, AI Translate turns unfamiliar surroundings into something users can read and understand, making it an essential companion for anyone eager to explore the world or learn a new language with ease.

- iOS app

- Backend APIs

- Machine learning

- Object recognition

- AWS

- Core ML (iOS)

- Swift

- Xcode

- Figma

- Create ML

AI Translate uses machine learning (ML) to perform object recognition on mobile devices and then translates the predictions. Its ML models identify objects across a range of categories, and the recognition results are translated into more than 100 languages. The app also includes text scanning, so users can translate printed or handwritten text instantly.

Problem to Solve

The core problem addressed in this article is choosing between running machine learning on the device with Core ML and calling a cloud service such as the Amazon Machine Learning API. The context is the development of the AI Translate iOS app, which identifies objects through the phone’s camera and translates their names, with potential applications for travelers and visually impaired users.

Solution

The app is built for frequent travelers, for whom minimizing internet usage is crucial, so we looked for a solution that put a seamless user experience first. Because the app must continuously analyze camera frames, a remote API would add network latency to every prediction and drive up server costs, which made a local, on-device solution the better fit.
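
To see why analyzing every frame remotely adds up, here is a back-of-envelope estimate in Swift. The frame rate, session length, and compressed frame size are hypothetical inputs chosen for illustration, not measurements from AI Translate.

```swift
import Foundation

/// Rough network cost of sending every analyzed camera frame to a remote
/// recognition API. All inputs are hypothetical, not measured app figures.
struct RemoteUsageEstimate {
    let requests: Int      // one network round trip per analyzed frame
    let megabytes: Double  // upload volume in decimal MB (1 MB = 1_000_000 bytes)
}

func estimateRemoteUsage(framesPerSecond: Int,
                         sessionSeconds: Int,
                         bytesPerFrame: Int) -> RemoteUsageEstimate {
    let requests = framesPerSecond * sessionSeconds
    let megabytes = Double(requests * bytesPerFrame) / 1_000_000
    return RemoteUsageEstimate(requests: requests, megabytes: megabytes)
}

// Assumed scenario: 10 frames/s during a 5-minute walk, ~50 KB per compressed frame.
let walk = estimateRemoteUsage(framesPerSecond: 10, sessionSeconds: 300, bytesPerFrame: 50_000)
print("\(walk.requests) requests, \(walk.megabytes) MB uploaded")  // 3000 requests, 150.0 MB uploaded
```

With on-device inference the same session makes no recognition requests at all; the only network cost is an occasional model download.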

However, a new challenge emerged: the app uses multiple machine learning models for different scenarios, and each one has a large file size. Bundling all of them with the app would bloat its download size and waste storage. To overcome this, we download machine learning models on demand. This keeps the app lightweight while still giving users the advantages of local machine learning, and the result is a responsive, efficient app suited to their travel needs.
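
A minimal sketch of on-demand model loading in Swift follows. It assumes each scenario maps to one model name and that models are hosted somewhere the app can download from; `ModelStore`, `fetch`, and `compile` are hypothetical names, not the app's actual code. On iOS, the `compile` closure would be Core ML's `MLModel.compileModel(at:)`, which compiles a downloaded `.mlmodel` into an `.mlmodelc` directory in a temporary location, and `fetch` would wrap a `URLSession` download. The usage at the end stubs out both so the sketch runs anywhere.

```swift
import Foundation

/// Hypothetical on-demand model store: a scenario's model is downloaded the
/// first time it is needed, compiled once, and then served from a local cache.
final class ModelStore {
    private let cacheDirectory: URL
    private let fetch: (String) throws -> URL  // downloads a raw .mlmodel, returns its file URL
    private let compile: (URL) throws -> URL   // on iOS, pass MLModel.compileModel(at:)
    private let fileManager = FileManager.default

    init(cacheDirectory: URL,
         fetch: @escaping (String) throws -> URL,
         compile: @escaping (URL) throws -> URL) throws {
        self.cacheDirectory = cacheDirectory
        self.fetch = fetch
        self.compile = compile
        try fileManager.createDirectory(at: cacheDirectory, withIntermediateDirectories: true)
    }

    /// Returns a compiled model's URL, downloading and compiling it only on first use.
    func compiledModelURL(named name: String) throws -> URL {
        let destination = cacheDirectory.appendingPathComponent("\(name).mlmodelc")
        if fileManager.fileExists(atPath: destination.path) {
            return destination  // cache hit: no network traffic
        }
        let downloaded = try fetch(name)
        let compiled = try compile(downloaded)
        // Compiled output lands in a temporary location, so move it into the cache.
        try fileManager.moveItem(at: compiled, to: destination)
        try? fileManager.removeItem(at: downloaded)  // the raw model is no longer needed
        return destination
    }

    /// Deletes a cached model to reclaim storage when its scenario is unused.
    func evict(named name: String) throws {
        let url = cacheDirectory.appendingPathComponent("\(name).mlmodelc")
        if fileManager.fileExists(atPath: url.path) {
            try fileManager.removeItem(at: url)
        }
    }
}

// Usage with stand-ins for the network and the compiler.
let root = FileManager.default.temporaryDirectory.appendingPathComponent(UUID().uuidString)
var downloadCount = 0
let store = try! ModelStore(
    cacheDirectory: root.appendingPathComponent("Models"),
    fetch: { name in
        downloadCount += 1
        let raw = root.appendingPathComponent("\(name).mlmodel")
        try Data("weights".utf8).write(to: raw)
        return raw
    },
    compile: { raw in
        let output = raw.deletingPathExtension().appendingPathExtension("compiled")
        try FileManager.default.createDirectory(at: output, withIntermediateDirectories: true)
        return output
    })

let first = try! store.compiledModelURL(named: "food")   // downloads and compiles
let second = try! store.compiledModelURL(named: "food")  // served from the cache
print(first == second, downloadCount)  // true 1
```

Injecting `fetch` and `compile` keeps the caching logic testable off-device; a production version would more likely use `async` downloads and report progress to the UI.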

Object recognition with Core ML

The main feature of our app is object recognition. To translate the objects around users into their native language, we first use AI to determine the identity of each object. The process begins with gathering a diverse, comprehensive collection of images of the objects the app needs to identify. Images are sourced from various angles, lighting conditions, and backgrounds so that the model generalizes well to real-world scenes. Careful labeling of these images is critical, because the labels tell the learning algorithm what each image contains during training. Depending on the complexity and variety of the objects, the dataset may range from thousands to millions of images.
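
Labels for an object detector are usually stored alongside the images. The Swift sketch below assumes the JSON layout Create ML accepts for object-detection training data (an image file name plus labeled boxes given by center `x`, `y`, `width`, and `height` in pixels) and counts boxes per label, a quick way to spot under-represented classes before training. The file names, labels, and the `minimum` threshold are made up for illustration.

```swift
import Foundation

// Mirrors Create ML's JSON annotation layout for object detection:
// one entry per image, each listing labeled boxes (x, y = box center, in pixels).
struct AnnotatedImage: Codable {
    struct Box: Codable { let x, y, width, height: Double }
    struct Annotation: Codable {
        let label: String
        let coordinates: Box
    }
    let image: String
    let annotations: [Annotation]
}

/// Counts labeled boxes per class so gaps in the dataset show up before training.
func labelCounts(in json: Data) throws -> [String: Int] {
    let images = try JSONDecoder().decode([AnnotatedImage].self, from: json)
    var counts: [String: Int] = [:]
    for image in images {
        for annotation in image.annotations {
            counts[annotation.label, default: 0] += 1
        }
    }
    return counts
}

/// Hypothetical rule of thumb: flag classes with fewer than `minimum` labeled boxes.
func underRepresented(_ counts: [String: Int], minimum: Int) -> [String] {
    counts.filter { $0.value < minimum }.keys.sorted()
}

let sample = Data("""
[
  {"image": "market_01.jpg", "annotations": [
    {"label": "apple", "coordinates": {"x": 120, "y": 164, "width": 80, "height": 76}},
    {"label": "banana", "coordinates": {"x": 300, "y": 210, "width": 140, "height": 60}}
  ]},
  {"image": "market_02.jpg", "annotations": [
    {"label": "apple", "coordinates": {"x": 90, "y": 100, "width": 70, "height": 72}}
  ]}
]
""".utf8)

let counts = try! labelCounts(in: sample)
print(underRepresented(counts, minimum: 2))  // ["banana"]
```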

Model Training

Once a rich dataset is assembled, the next step is training the Core ML model. Training uses a convolutional neural network (CNN), a type of deep learning algorithm well suited to processing visual information. The collected images are fed into the CNN, which learns the patterns and features that define each object. The model goes through numerous iterations, each time adjusting its internal parameters to reduce the error between its predictions and the actual labels. This phase is resource-intensive and time-consuming, often requiring powerful GPUs to refine the model until it reaches high accuracy and performance.
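
Training a CNN does not fit in a few lines, but the loop described above (predict, measure the error against the labels, adjust the parameters, repeat) is the same loop that trains a single logistic unit. The Swift below is a deliberately tiny stand-in: batch gradient descent on the log loss over made-up two-feature data. It is not a convolutional network and not how Create ML works internally.

```swift
import Foundation

// A toy stand-in for training: one logistic "neuron", not a CNN.
struct Sample {
    let features: [Double]
    let label: Double  // 1 = object present, 0 = absent
}

func sigmoid(_ z: Double) -> Double { 1 / (1 + exp(-z)) }

func predict(_ features: [Double], weights: [Double], bias: Double) -> Double {
    sigmoid(zip(weights, features).reduce(bias) { $0 + $1.0 * $1.1 })
}

/// Batch gradient descent on the average log loss; records the loss each epoch.
func train(_ data: [Sample], epochs: Int, learningRate: Double)
    -> (weights: [Double], bias: Double, losses: [Double]) {
    let n = data[0].features.count
    var weights = [Double](repeating: 0, count: n)
    var bias = 0.0
    var losses: [Double] = []
    for _ in 0..<epochs {
        var gradW = [Double](repeating: 0, count: n)
        var gradB = 0.0
        var loss = 0.0
        for sample in data {
            let p = predict(sample.features, weights: weights, bias: bias)
            // Log loss: the error between prediction p and the true label.
            loss -= sample.label * log(p) + (1 - sample.label) * log(1 - p)
            let error = p - sample.label  // derivative of the log loss w.r.t. the logit
            for i in 0..<n { gradW[i] += error * sample.features[i] }
            gradB += error
        }
        let m = Double(data.count)
        // Move every parameter a small step against its average gradient.
        for i in 0..<n { weights[i] -= learningRate * gradW[i] / m }
        bias -= learningRate * gradB / m
        losses.append(loss / m)
    }
    return (weights, bias, losses)
}

let data = [
    Sample(features: [0.0, 0.0], label: 0), Sample(features: [0.2, 0.1], label: 0),
    Sample(features: [0.9, 1.0], label: 1), Sample(features: [1.0, 0.8], label: 1),
]
let model = train(data, epochs: 500, learningRate: 1.0)
print(model.losses.first!, model.losses.last!)  // the loss shrinks as training iterates
```

A real CNN replaces the single weighted sum with stacks of convolution layers and computes gradients by backpropagation, but each iteration follows the same predict, measure, update pattern.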

Model Optimization and Conversion

After training, the model is optimized to run efficiently on mobile devices. This optimization can involve pruning the neural network, quantizing its weights, and optionally using Core ML on-device model personalization, which lets the model keep learning directly on the device. The trained model is then converted into the Core ML format with Apple’s coremltools package. This conversion ensures compatibility with iOS devices and lets Core ML run the model on Apple’s hardware-accelerated Neural Engine on supported devices, which significantly speeds up inference.
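
coremltools applies these optimizations in Python, so the Swift below only illustrates the arithmetic behind two of them: magnitude pruning (zeroing the smallest weights) and symmetric 8-bit linear quantization with a single per-tensor scale. The per-tensor scale, the 8-bit width, and the sample weights are assumptions for the sketch; production toolchains also offer other schemes, such as per-channel scales.

```swift
import Foundation

/// Magnitude pruning: zero out the `fraction` of weights with the smallest absolute value.
func prune(_ weights: [Float], fraction: Double) -> [Float] {
    let k = Int(Double(weights.count) * fraction)
    let smallest = weights.indices.sorted { abs(weights[$0]) < abs(weights[$1]) }.prefix(k)
    var pruned = weights
    for i in smallest { pruned[i] = 0 }
    return pruned
}

/// Symmetric linear 8-bit quantization with one scale for the whole tensor.
struct QuantizedTensor {
    let values: [Int8]  // 1 byte per weight instead of 4
    let scale: Float    // real value is approximately Float(value) * scale
}

func quantize(_ weights: [Float]) -> QuantizedTensor {
    let maxAbs = weights.map { abs($0) }.max() ?? 0
    let scale = maxAbs > 0 ? maxAbs / 127 : 1
    let values = weights.map { w -> Int8 in
        let q = (w / scale).rounded()        // round to the nearest integer step
        return Int8(max(-127, min(127, q)))  // clamp to the symmetric Int8 range
    }
    return QuantizedTensor(values: values, scale: scale)
}

func dequantize(_ tensor: QuantizedTensor) -> [Float] {
    tensor.values.map { Float($0) * tensor.scale }
}

let layer: [Float] = [0.5, -1.27, 0.01, 0.9, -0.02, 1.27, 0.3, -0.6]
let pruned = prune(layer, fraction: 0.25)  // zeroes the two smallest-magnitude weights
let quantized = quantize(pruned)
let restored = dequantize(quantized)
print(quantized.values)  // [50, -127, 0, 90, 0, 127, 30, -60]
```

Storing `Int8` instead of `Float` cuts weight storage to a quarter, at the cost of a rounding error of at most half a quantization step per weight.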

App Integration and Object Recognition

The final step is integrating the Core ML model into the iOS app. This involves writing code that handles real-time image data from the device’s camera and uses the Core ML framework to run that data through the model for object recognition. As the app processes the camera feed, frames are passed to the Core ML model, which predicts the objects in real time. These predictions are displayed to the user, often as bounding boxes around the recognized objects with labels indicating what they are. The app needs to be user-friendly and responsive, providing immediate and accurate object recognition to deliver a seamless user experience.
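
On iOS this loop is typically wired up with Vision: a `VNCoreMLModel` wraps the Core ML model, a `VNCoreMLRequest` runs it on each camera frame through a `VNImageRequestHandler` created from the frame’s `CVPixelBuffer`, and object-detection models return `VNRecognizedObjectObservation` results whose `labels` and normalized `boundingBox` describe each object. Those calls only run on Apple platforms, so the sketch below covers the portable step that follows: keeping confident predictions and converting Vision’s normalized, lower-left-origin boxes into upper-left-origin view coordinates for drawing. `Detection`, `Overlay`, and the 0.6 confidence threshold are illustrative assumptions, and plain `Double` structs stand in for `CGRect`.

```swift
import Foundation

/// The fields an app might copy out of a Vision VNRecognizedObjectObservation:
/// top label, its confidence, and the normalized bounding box (origin at lower-left).
struct Detection {
    let label: String
    let confidence: Float
    let x, y, width, height: Double  // normalized to 0...1 in Vision's coordinate space
}

/// A rectangle in view points, with UIKit's upper-left origin.
struct ViewRect: Equatable {
    let x, y, width, height: Double
}

struct Overlay {
    let label: String
    let rect: ViewRect
}

/// Drops low-confidence detections and maps boxes into view coordinates,
/// assuming the preview shows the whole camera frame without cropping.
func overlays(for detections: [Detection],
              viewWidth: Double, viewHeight: Double,
              minimumConfidence: Float = 0.6) -> [Overlay] {
    detections
        .filter { $0.confidence >= minimumConfidence }
        .map { d -> Overlay in
            let rect = ViewRect(
                x: d.x * viewWidth,
                y: (1 - d.y - d.height) * viewHeight,  // flip: Vision's y grows upward
                width: d.width * viewWidth,
                height: d.height * viewHeight)
            return Overlay(label: d.label, rect: rect)
        }
}

let frameResults = [
    Detection(label: "cup", confidence: 0.92, x: 0.25, y: 0.5, width: 0.5, height: 0.25),
    Detection(label: "chair", confidence: 0.30, x: 0.1, y: 0.1, width: 0.2, height: 0.2),
]
for overlay in overlays(for: frameResults, viewWidth: 400, viewHeight: 800) {
    print(overlay.label, overlay.rect)
}
```

If the preview crops or letterboxes the frame (for example with an aspect-fill preview layer), the mapping needs an extra offset and scale that this sketch leaves out.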

Text identification and translation

A standout feature lets users point their phone camera at text and have it translated automatically into their desired language. This relies on two core services. The first is OCR (Optical Character Recognition), which uses machine learning to convert images of text into machine-readable text. Once the text is extracted, the app passes it to a second service that translates it into the user’s specified language. Users choose the input language of the scanned text and the output language for the translation. By combining OCR and translation services, Frame Sixty’s AI Translate breaks language barriers while giving users full control over their language preferences.
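
The two-stage flow can be expressed as a small Swift pipeline with the OCR and translation services behind protocols, so either can run on-device or remotely. This is a sketch under assumptions: `TextRecognizer`, `Translator`, `ScanAndTranslate`, and the stand-in implementations are hypothetical names, not the app’s code. On iOS, a `TextRecognizer` could be backed by Vision’s `VNRecognizeTextRequest`, whose `recognitionLanguages` property takes the user’s chosen input language (for example `"fr-FR"`).

```swift
import Foundation

/// Extracts lines of text from an image. On iOS this could wrap Vision's
/// VNRecognizeTextRequest with recognitionLanguages set to the input language.
protocol TextRecognizer {
    func recognizeLines(in image: Data, language: String) throws -> [String]
}

/// Translates text between two language codes, on-device or through a remote service.
protocol Translator {
    func translate(_ text: String, from source: String, to target: String) throws -> String
}

struct ScanSettings {
    var inputLanguage: String   // language of the scanned text, chosen by the user
    var outputLanguage: String  // language to translate into
}

struct TranslatedLine {
    let original: String
    let translated: String
}

/// Step 1: OCR. Step 2: translate each non-empty recognized line.
struct ScanAndTranslate {
    let recognizer: any TextRecognizer
    let translator: any Translator

    func run(image: Data, settings: ScanSettings) throws -> [TranslatedLine] {
        let lines = try recognizer.recognizeLines(in: image, language: settings.inputLanguage)
        var results: [TranslatedLine] = []
        for raw in lines {
            let line = raw.trimmingCharacters(in: .whitespacesAndNewlines)
            guard !line.isEmpty else { continue }  // skip blank OCR lines
            let translated = try translator.translate(line, from: settings.inputLanguage,
                                                      to: settings.outputLanguage)
            results.append(TranslatedLine(original: line, translated: translated))
        }
        return results
    }
}

// Stand-ins so the pipeline runs without a camera or a translation service.
struct FixedRecognizer: TextRecognizer {
    let lines: [String]
    func recognizeLines(in image: Data, language: String) throws -> [String] { lines }
}

struct PhrasebookTranslator: Translator {
    let phrases: [String: String]
    func translate(_ text: String, from source: String, to target: String) throws -> String {
        phrases[text] ?? text  // fall back to the original when no entry exists
    }
}

let pipeline = ScanAndTranslate(
    recognizer: FixedRecognizer(lines: ["Sortie", "  ", "Entrée interdite"]),
    translator: PhrasebookTranslator(phrases: ["Sortie": "Exit", "Entrée interdite": "No entry"]))
let results = try! pipeline.run(image: Data(),
                                settings: ScanSettings(inputLanguage: "fr-FR", outputLanguage: "en-US"))
for line in results { print("\(line.original) -> \(line.translated)") }
```

Keeping the translator behind a protocol means the app could switch between an on-device and a remote translation service without touching the OCR step.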

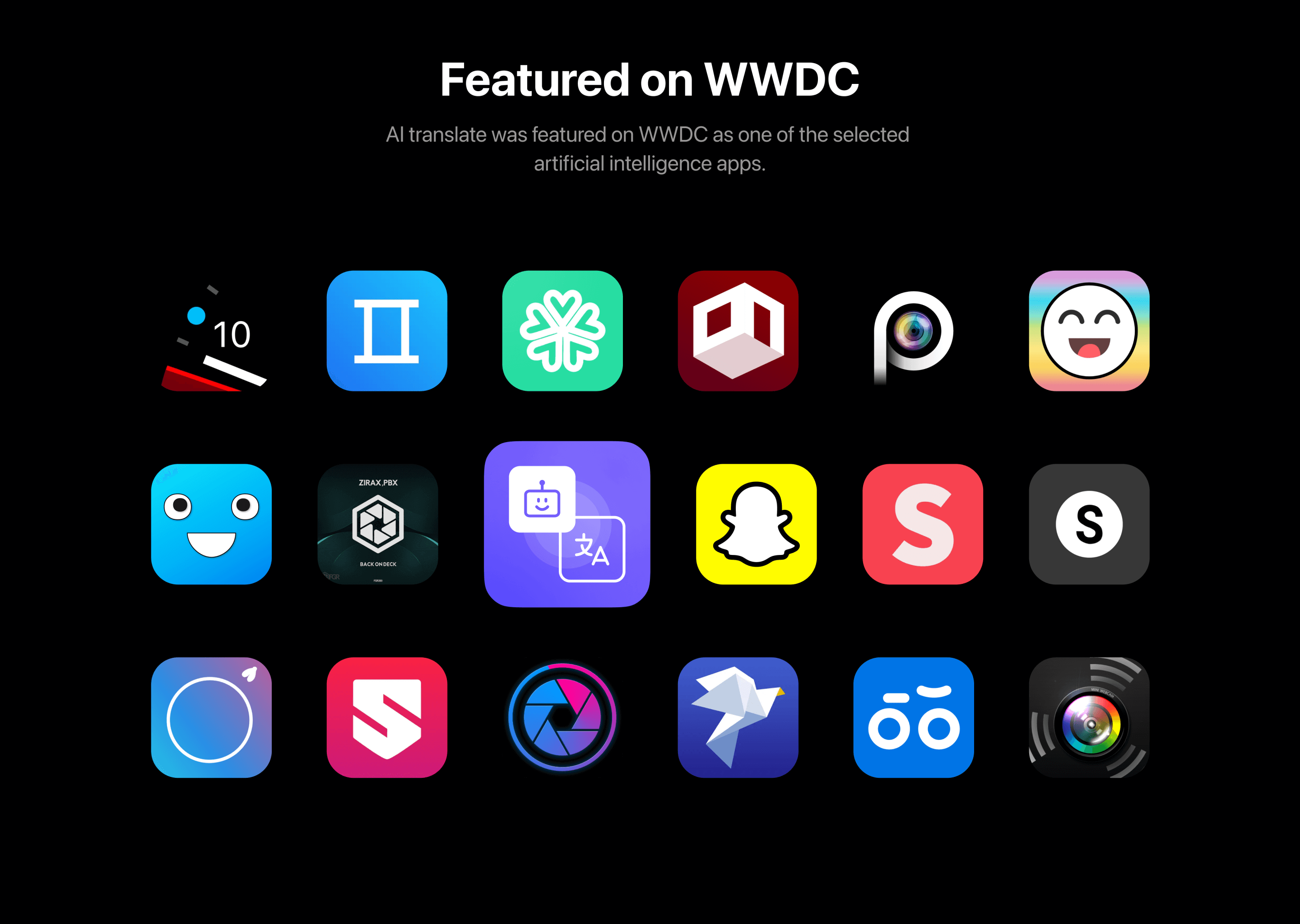

Featured at WWDC

We are elated to share that our innovative app, which translates real-world objects into multiple languages, has been spotlighted at the Worldwide Developers Conference (WWDC) among the top AI applications of the year. This recognition is a significant acknowledgment of our app’s ability to bridge language barriers and enhance global communication. Leveraging advanced Core ML models for instant object recognition and translation, our app stands out as a transformative tool in the realm of educational and travel technology. Featured at WWDC, our app has not only received acclaim but also showcased our vision for a more interconnected and accessible world through smart technology.

Conclusion

In conclusion, Frame Sixty’s AI Translate is not just an app but a technological leap forward, addressing the fundamental need for seamless communication across languages and cultures. Its recognition at WWDC underscores how well it harnesses Apple’s AI and machine learning frameworks, delivering instant and accurate object and text translations in over 100 languages. Designed with travelers and visually impaired users in mind, AI Translate goes beyond the typical app experience by running recognition locally on the device, ensuring speed and reliability without the need for constant internet access. By integrating OCR and on-demand ML model downloads, the app remains at the forefront of innovation, empowering users to explore and interact with the world around them without language constraints. This app is a must-have for global explorers and language enthusiasts alike, and it is ready to download from the App Store to redefine the way we understand and communicate in our diverse world.
