1. Introduction

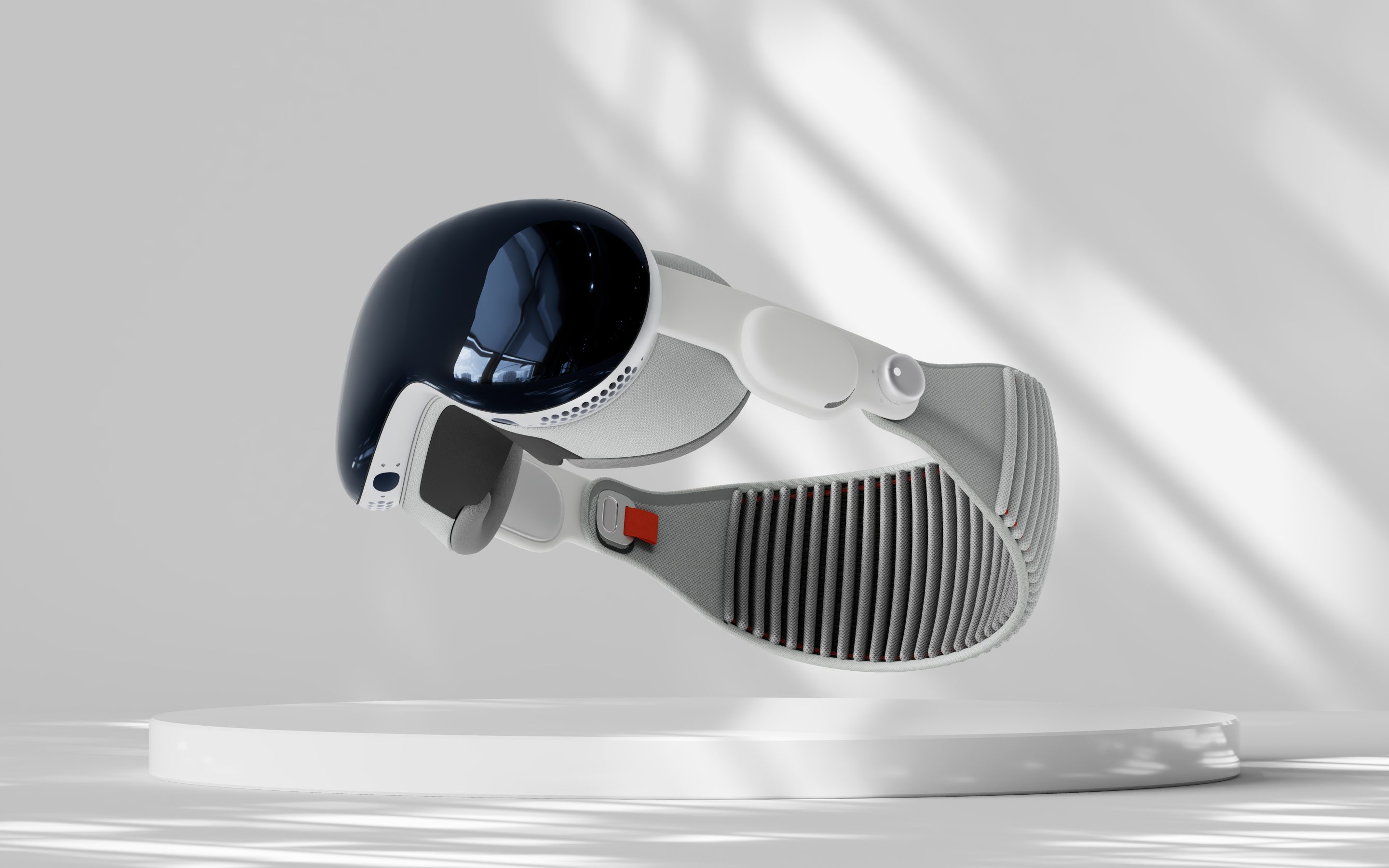

Personal Reflections: Two Years of Vision Pro Development

I’ve been developing software for the Apple Vision Pro for over two years now, and I must say, it feels like standing at the edge of a whole new reality. In those 24 months, my team and I have delved deeply into creating apps for the device. I’ve gained unique insights into its disruptive potential, from medical technologies to immersive environments and pioneering developments in spatial video and computing. Here, I want to share a perspective often reserved for those on the inside, revealing breakthroughs that might otherwise remain unseen by the general public.

Defining Apple Vision Pro’s Significance

Why should you care about Apple Vision Pro? Beyond the novelty, the device represents the next wave of personal computing. We’ve gone from mainframes to desktops, from desktops to laptops, and from laptops to smartphones. Now, we’re stepping into a realm where you can wear your digital workspace, data overlays, and AI interfaces directly on your head, freeing your hands. For me, it’s remarkable that we’re essentially merging the digital and physical worlds in real time. The significance goes beyond gaming or entertainment, though those are fun, too. It’s about using technology in a way that seamlessly integrates with our environment, rather than confining us to the glowing rectangle of a traditional screen.

2. Developer’s Lens: Breakthroughs and Enterprise Innovations

Spatial Video

One area where Apple Vision Pro truly shines is spatial video. Think of spatial video as the natural evolution of traditional 2D media, where you’re no longer just watching something on a flat screen. Instead, you feel enveloped by a scene, free to walk around or lean in to see details from different angles. The potential extends well beyond entertainment. Educational institutions are exploring ways to record lectures or medical procedures in 3D, allowing students to virtually “step into” these sessions later. Architects, meanwhile, can create immersive project walkthroughs for clients, giving them a lifelike preview of a building still under construction.

Spatial Computing

This leap from flat to spatial is part of a broader shift toward spatial computing. Apple Vision Pro merges the digital and physical in ways that let you move around and manipulate information naturally. It’s no longer about tapping an icon on a screen. It’s about turning your head to select a digital panel on the wall or pinching the air to rotate a 3D object. Over time, as more developers tap into this potential, we’ll see entirely new categories of applications that utilize these volumetric experiences.

Private Enterprise Innovations

Here’s where many critics miss the mark: the real breakthroughs I’ve seen are happening largely behind closed doors. Enterprises are developing specialized applications for training, remote collaboration, simulations, and more. They’re not exactly tweeting about it. These internal tools might never reach the public, but they’re refining workflows, improving safety, and delivering cost savings at scale. While the average consumer might grumble about the Vision Pro’s price tag, major corporations see it as an investment that could save millions in operational costs, travel expenses, or training inefficiencies.

3. AI Integration in Apple Vision Pro

Advanced Classification and Data Visualization

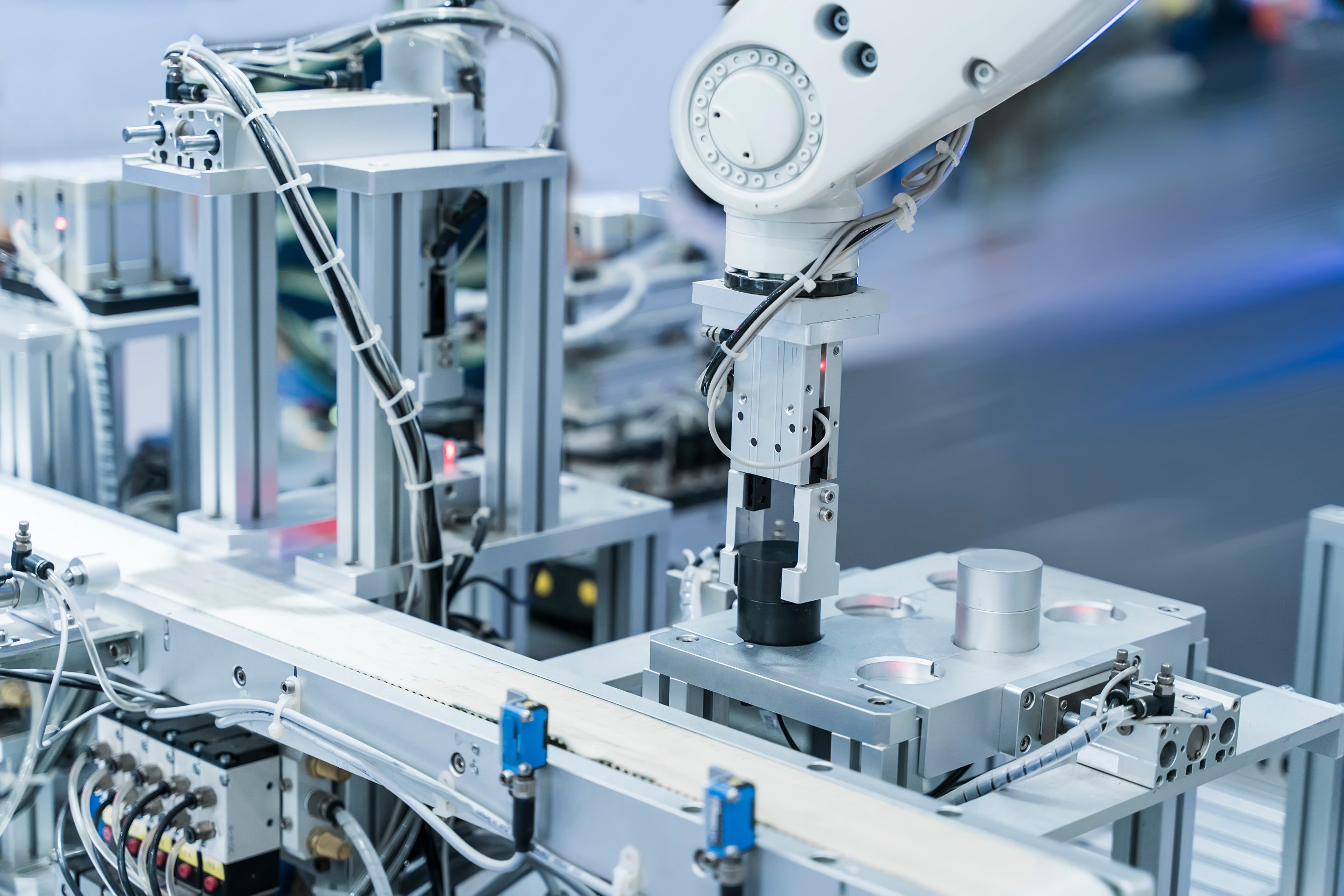

One of the most thrilling aspects of developing on the Vision Pro is its tight integration with AI models. Traditionally, you’d pull out your smartphone, point the camera, and let an app classify the objects in your environment—like scanning a plant to see if it’s a succulent or a fern. Now, with Vision Pro, that entire process happens right before your eyes, hands-free. You look at an object, the AI identifies it, and the device can overlay useful data in real time. It’s almost like you’re wearing a pair of scientific goggles that decode the world around you.

I have seen classification models that help field technicians identify faulty machinery. When the user glances at a worn-out gear, an alert pops up, suggesting potential fixes or part replacements. Another scenario involves inventory management, where a quick gaze at a stack of boxes can confirm stock levels, shipping details, or possible misplacements. The big leap is that your hands remain free to interact with physical objects. There’s no more juggling a phone or scanning barcodes manually. It’s all spatial, direct, and immediate.
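As a rough illustration of the decision logic behind such an overlay, here is a minimal, platform-agnostic sketch in Python. It is not the code from the projects described above, and it does not use any visionOS API. The `Prediction` type, the `SUGGESTED_FIXES` table, and the confidence and dwell thresholds are all assumptions made up for the example: the idea is simply to show an alert only when the classifier is confident and the user has looked at the object long enough.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Prediction:
    label: str         # class name from some image classifier (hypothetical)
    confidence: float  # score in the range [0, 1]


# Hypothetical lookup table; a real app would query a maintenance system.
SUGGESTED_FIXES = {
    "worn_gear": "Replace gear; check lubrication schedule.",
    "loose_belt": "Re-tension belt.",
}


def overlay_text(pred: Prediction, dwell_seconds: float,
                 min_confidence: float = 0.8,
                 min_dwell: float = 0.5) -> Optional[str]:
    """Return the text to overlay, or None if nothing should be shown."""
    if dwell_seconds < min_dwell:
        return None  # a passing glance should not trigger an alert
    if pred.confidence < min_confidence:
        return None  # avoid cluttering the view with uncertain guesses
    fix = SUGGESTED_FIXES.get(pred.label)
    if fix is None:
        return None  # recognized object, but nothing actionable to show
    return f"{pred.label} ({pred.confidence:.0%}): {fix}"


print(overlay_text(Prediction("worn_gear", 0.93), dwell_seconds=1.0))
```

Gating on both confidence and dwell time is one simple way to keep the field of view quiet until there is something worth acting on; the right thresholds would depend on the model and the task.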

Hand Tracking

Perhaps the project closest to my heart is one that harnesses the Vision Pro’s hand tracking to interpret sign language. Here’s how it works: the device’s cameras capture hand poses and movements in real time. The feed goes into an AI model trained on various sign language gestures. The system then translates these gestures into text or spoken words, displayed in the user’s field of view. It’s an extraordinary tool for bridging communication gaps between the Deaf and hearing communities.
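The capture, classify, and translate steps can be sketched in a few lines of Python. This is an illustrative outline under stated assumptions, not the project's actual implementation: the `Frame` layout, the `classify` callback (standing in for the trained model), and the `stable_frames` debounce rule are all hypothetical. The sketch emits a sign only after the model has predicted it for several consecutive frames, which is one common way to suppress flicker in per-frame predictions.

```python
from typing import Callable, Iterable, List, Optional, Sequence

# Flattened hand-joint coordinates for one captured frame (assumed layout).
Frame = Sequence[float]


def transcribe(frames: Iterable[Frame],
               classify: Callable[[Frame], Optional[str]],
               stable_frames: int = 3) -> List[str]:
    """Turn per-frame gesture labels into a list of recognized signs.

    A label is emitted once it has been predicted for `stable_frames`
    consecutive frames, and not again until the prediction changes.
    """
    words: List[str] = []
    current: Optional[str] = None  # label predicted for the latest frame
    run = 0                        # consecutive frames with that label
    for frame in frames:
        label = classify(frame)
        if label == current:
            run += 1
        else:
            current, run = label, 1
        if label is not None and run == stable_frames:
            words.append(label)
    return words


# Stand-in classifier for the example: the first value of each frame
# selects a label. A real system would run a trained model here.
def toy_classifier(frame: Frame) -> Optional[str]:
    return {0: None, 1: "HELLO", 2: "THANKS"}[int(frame[0])]


frames = [[1], [1], [1], [1], [0], [2], [2], [1], [2], [2], [2]]
print(" ".join(transcribe(frames, toy_classifier)))  # HELLO THANKS
```

The resulting list of signs is what would then be rendered as text or passed to speech synthesis in the user's field of view.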

I recall the moment our team successfully interpreted a complete sentence using sign language in real time. It felt magical. Everything from the precision of the sensors to the processing power of the AI engine inside Vision Pro had to align perfectly. The immediate applications are vast—healthcare settings, educational institutions, and everyday social interactions. Over time, this could expand to interpret multiple sign languages and even regional dialects. You can read more about our project here.

Future Outlook for AI and AR

With the Vision Pro, Apple is laying the foundation for a world where AI is always available, always aware, and always contextually relevant to your surroundings. We’re already seeing the hardware and software bridging the gap between digital data and the physical environment. Soon, you might walk through a supermarket, glance at products, and see nutritional information, recipes, or coupons pop up automatically. Or you could be in a library, scanning titles on a shelf, and instantly access reviews or author bios.

This blend of AR and AI is so potent that I see it as a parallel evolution to the meteoric rise of ChatGPT and other AI technologies. While large language models transform how we process and generate text, Apple Vision Pro is transforming how we perceive and interact with the world visually. The synergy between them feels inevitable—imagine combining the advanced reasoning powers of AI with the contextual awareness of AR. We’re not just heading toward incremental upgrades; we’re on track for a paradigm shift in how humans consume and create information.

4. Enterprise Use Cases and Collaborative Possibilities

Enhancing Productivity and Hands-Free Interaction

In corporate environments, a hands-free interface can be a colossal boon. Picture an architect walking around a construction site while wearing the Vision Pro, referencing blueprints overlaid onto the physical framework. Or a manufacturing operator receiving step-by-step assembly instructions in real time without ever pausing to scroll through a manual. In warehousing, a manager can instantly locate inventory items by scanning the shelves—no more rummaging for barcodes or flipping through clipboards.

These scenarios are not science fiction. They’re real pilot programs I’ve seen. One enterprise client I partnered with created a custom application that combined real-time data from their industrial IoT sensors with the AR overlay. The result? Supervisors could roam the factory floor, seeing live readouts of temperature, pressure, and equipment status just by looking at various machines. It dramatically improved their response times and helped them catch small issues before they erupted into major delays.
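To give a sense of what sits between the sensor feed and the overlay, here is a small Python sketch. It is a simplified illustration rather than the client's application: the `Reading` type, the machine IDs, and the temperature and pressure limits are hypothetical values chosen for the example. Given the latest reading for each machine, it produces the line of text a supervisor might see when looking at one of them.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Reading:
    temperature_c: float
    pressure_kpa: float


# Hypothetical limits; real values would come from equipment specifications.
MAX_TEMPERATURE_C = 85.0
MAX_PRESSURE_KPA = 600.0


def machine_label(machine_id: str, latest: Dict[str, Reading]) -> str:
    """Build the overlay line for the machine the user is looking at."""
    reading = latest.get(machine_id)
    if reading is None:
        return f"{machine_id}: no data"
    warnings = []
    if reading.temperature_c > MAX_TEMPERATURE_C:
        warnings.append("temperature high")
    if reading.pressure_kpa > MAX_PRESSURE_KPA:
        warnings.append("pressure high")
    status = "OK" if not warnings else "WARNING: " + ", ".join(warnings)
    return (f"{machine_id}: {reading.temperature_c:.1f} C, "
            f"{reading.pressure_kpa:.0f} kPa, {status}")


latest = {"press-1": Reading(91.24, 540.0), "press-2": Reading(60.0, 410.0)}
print(machine_label("press-1", latest))
print(machine_label("press-2", latest))
```

Flagging out-of-range values in the label itself is what lets a supervisor spot a small issue at a glance instead of reading raw numbers.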

Driving Change in Healthcare, Manufacturing, and Education

Healthcare remains a major beneficiary of the Vision Pro. Educational programs for medical students can place them right in the middle of a simulated surgical procedure—no longer just reading about techniques but visually interacting with them as if they were physically present in the operating room.

Similarly, manufacturing lines benefit from upskilling workers rapidly. Instruction manuals come to life as interactive 3D guides, and new hires can see each assembly step highlighted on the actual parts, reducing confusion and errors. Education, in a broader sense, is also poised for transformation. From virtual field trips to labs that let students manipulate molecular structures in mid-air, the Vision Pro can spark creativity and understanding through deeply engaging lessons. The learning potential is almost unlimited when you can immerse yourself in a subject rather than passively read about it.

5. Competition and Parallel Paths

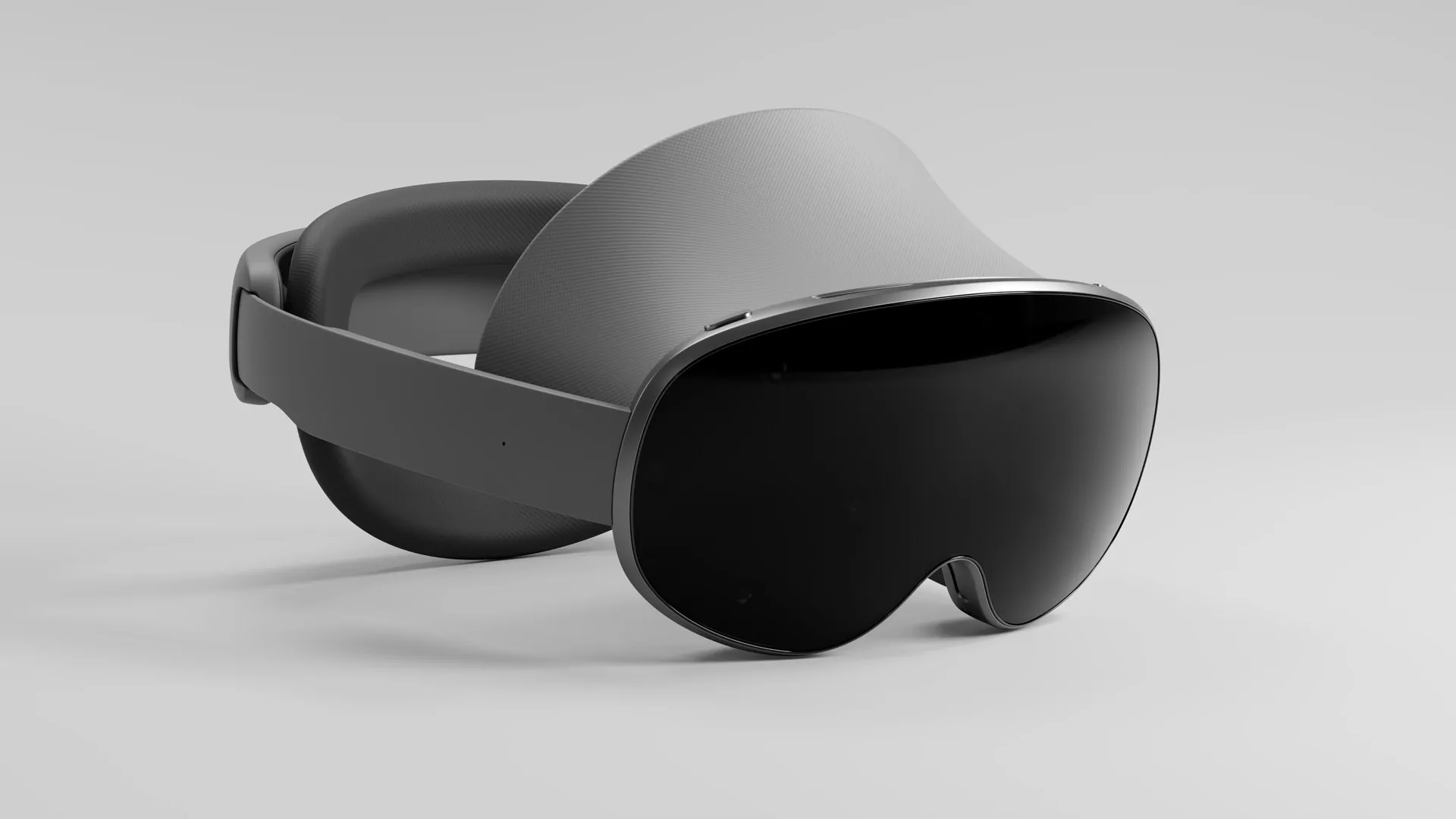

Rival Headsets: Meta Orion, Samsung Project Moohan

Apple isn’t the only player in the AR space. Meta (formerly Facebook) has been developing its Orion glasses, while Samsung and Google plan to release their next-gen headset, Project Moohan, later in 2025. Each contender has its unique slant: some focus on lighter form factors, some on specialized enterprise functionalities, and others on social interactions or gaming. While competition can be fierce, it’s actually good news for the industry. More entrants mean more innovation, better prices, and faster improvements.

AR and AI Convergence: The Next Big Thing

We’re living in an era where AR and AI are both advancing at a breakneck pace. While AI is powering natural language models, speech recognition, and intelligent automations, AR is pushing boundaries in how we physically engage with digital information. When these paths converge entirely, the potential is staggering. You might have an AI that understands your preferences, can hold a conversation about your surroundings, and can manipulate those surroundings in augmented reality. Imagine walking through your home and asking an AI to redesign your interior. You’d watch the furniture shift, walls repaint, and décor rearrange in real time, all based on your spoken instructions.

This synergy isn’t just futuristic daydreaming; it’s already happening in small steps. The sign language translation project we built on the Vision Pro is an early glimpse of AI-AR convergence. Another example might be real-time language translation for travelers. As you walk through a foreign city, signs appear in your native tongue, and store clerks’ voices are automatically subtitled in your field of view. The technology to do all of this is in motion right now, and the Vision Pro is one of the leading vehicles driving us there.

6. The Road Ahead

From Bulky Headsets to Reading Glasses and Neural Implants

Critics of AR headsets often complain about how heavy or cumbersome they are. And yeah, today’s versions can feel a bit bulky for prolonged wear. But technology never stands still. Compare the original mobile phones to modern smartphones, or the first personal computers to the ultralight laptops of today. We see the same trajectory for AR headsets. Sooner than we think, we’ll have devices the size of reading glasses, or even contact lenses, that deliver the same immersive experience we currently get from a Vision Pro.

Building the Infrastructure for Tomorrow’s Industries

It’s not just about headsets or contact lenses. We need a robust ecosystem that includes high-speed connectivity (like 5G or beyond), powerful edge computing solutions, and developer-friendly platforms to power these experiences. Enterprises are already paving the way by deploying private 5G networks in factories, ensuring low-latency data transmission for real-time AR. Educational institutions are experimenting with immersive classrooms. Retailers are creating virtual stores accessible via AR. All of these moves lay the groundwork for an environment where AR is as standard as the internet or smartphones are today.

In my personal roadmap, I see a world where architecture, medicine, education, entertainment, and everyday life converge in a single immersive layer. You won’t have to consciously think about “entering AR”—it’ll just be there when you need it, invisible when you don’t. Apple Vision Pro is the stepping stone, the transitional device that introduces the masses (and big corporations) to the staggering possibilities of an augmented future.

7. Addressing Critics and Limitations

Pricing, Access, and Enterprise-Only Breakthroughs

Of course, the Vision Pro is not without its critics. The cost can be prohibitive, especially for individual consumers who might only use it for gaming or occasional entertainment. This is exactly why so many breakthroughs remain in the purview of enterprise R&D departments. Companies have the budget and the incentive to adopt cutting-edge solutions, which quickly pay off in productivity gains or competitive advantages.

Still, it’s important to note that Apple’s history suggests hardware evolves rapidly, and prices often drop over time. Today’s high-end device might spawn a more affordable variant in the future. Once you witness what’s happening behind corporate doors—like advanced med-tech applications, real-time translations, and massive operational savings—the price point makes more sense from an enterprise standpoint. But it may take a while for these developments to trickle down to consumers in a format that’s both accessible and economically feasible.

8. Conclusion

Why Apple Vision Pro May Be the Greatest Invention of the Decade

After working hands-on with the Vision Pro for two years, I can confidently say it’s more than just another gadget. It’s the culmination of decades of research in sensor technology, AI, and user interface design—packaged in a way that can truly reshape industries. Med-tech, immersive gaming, enterprise training, sign language translation, advanced classification systems—the applications are mind-blowing, and we’re only scratching the surface.

As a developer, I’ve had the unique pleasure of peeking behind the corporate curtain, witnessing how major companies are already embracing Vision Pro to change the face of productivity, training, and communication. Despite the hurdles of cost, privacy concerns, and hardware miniaturization, the Vision Pro is forging a path that other headsets and platforms can follow. When you combine Apple’s ecosystem with the unstoppable force of AI-driven advancements, the result is a new paradigm of computing that merges the physical and digital worlds in real time.

A Developer’s Final Word on AR’s Future

We’re standing on the edge of a revolution—one that goes hand in hand with AI’s meteoric rise. While AI helps us parse data and automate tasks, AR reshapes how that data is presented and interacted with. The Apple Vision Pro exemplifies how these two forces can collaborate to deliver experiences that once seemed like pure science fiction. And this is just the first iteration.

Yes, other headsets like Meta’s Orion glasses, Samsung’s Project Moohan, and Google’s new concepts are on the horizon. But from my perspective, this competition only fuels a healthier, more robust ecosystem. It ensures that, as developers, we keep pushing boundaries, refining applications, and improving user experiences. In a decade, we might reminisce about the days when AR headsets were still a novelty and marvel at how quickly they became as ubiquitous as smartphones. If you ask me, that future can’t come soon enough. And Apple Vision Pro is leading us there, step by step.

Frame Sixty is at the forefront of Vision Pro development, combining years of expertise in augmented reality with a passion for spatial computing. We partner with both startups and major enterprises to craft bespoke solutions that push the boundaries of mixed reality. If you’re looking to accelerate your next project or simply explore how Vision Pro can transform your industry, we invite you to reach out and book a meeting to discuss how we can bring your vision to life.

FAQs

How does spatial computing differ from regular AR experiences?

Spatial computing goes beyond overlaying digital objects on a flat plane. It lets users move around and manipulate 3D information in a natural way, creating immersive experiences that feel more intuitive and realistic than standard AR overlays.

Is it difficult for developers to build for Apple Vision Pro?

Apple provides developer tools and documentation to ease the learning curve. If you’re familiar with Apple’s ecosystem (e.g., Xcode, ARKit, Swift), transitioning to Vision Pro development typically involves extending your existing AR knowledge to spatial computing concepts.

How is Vision Pro expected to evolve over time?

Current headsets might seem bulky and specialized, but industry trends suggest future versions will become lighter and more affordable, and may eventually take the form of reading glasses or even contact lenses, expanding AR adoption across all markets.

Can Vision Pro be easily integrated into existing enterprise workflows?

Yes. Many companies use private 5G networks and IoT data pipelines to feed real-time info into Vision Pro apps. With the right development and infrastructure, integration can be surprisingly seamless and yield substantial efficiency gains.